Will the EU AI Act Affect Your E-Shop in 2026? (Probably Yes)

Wait, do I need a lawyer now?!

If you run an online store and have heard whispers (or panicked Slack messages) about the new EU AI Act, you’re probably wondering whether it’s something serious or just another regulation no one enforces.

Here’s the short answer: if your e-shop uses AI in any way, like a chatbot, product recommendations, or automated support, you need to pay attention.

But don’t worry, we’re not here to bore you with 40 pages of legal jargon. This guide is built for real businesses: short, clear, practical, and maybe even a little fun. We’ll break down what the EU AI Act means for e-commerce, what you actually need to do, and how to stay on the right side of the law (without turning into a part-time lawyer).

What Is the EU AI Act? (No Legal Jargon, I Promise)

Think of the EU AI Act like the GDPR for artificial intelligence — but instead of cookie banners, we’re talking chatbots, recommendations, and anything that talks like a machine.

So... What Is It?

The EU AI Act is a new law from the European Union, designed to make sure artificial intelligence doesn’t go rogue.

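It entered into force in August 2024 and applies in stages: the outright bans have applied since February 2025, and most of the rules that matter for e-shops (like chatbot transparency) kick in from August 2026. But heads up: the rules you’ll need to follow depend on how you’re using AI.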

It’s not one-size-fits-all. It’s more like:

“If your AI tool can mess with people’s lives, you’ve got some paperwork. If it just helps them find the right shoes faster, you’re probably fine.”

Learn more about how the EU defines and explains the goals of the AI Act in this official overview.

EU AI Act Risk Levels Explained (from "Totally Fine" to "Absolutely Not")

The EU AI Act takes a risk-based approach: it splits AI tools into four categories, and not all AI is treated equally. Think of a store alarm: it doesn’t go off when someone tries on shoes, but it definitely does if they sprint out with a TV.

Here’s the EU’s AI risk scale:

- 🟢 Minimal risk

Are you using AI to filter spam, suggest products, or auto-fill support responses for your team? Congrats: the Act adds no special rules here. Just keep being honest with your users.

- 🟡 Limited risk

Still pretty chill, but with transparency obligations. You’ll need to be open about using AI: label it, don’t pretend it’s human (users should know your chatbot isn’t secretly Dave from accounting), and keep users informed. For example: AI writing product descriptions, generating content, or helping answer support tickets.

- 🟠 High risk

This is where things get serious. If your AI helps screen job candidates, predicts user behavior in ways that decide access to credit or insurance pricing, or uses biometric data to identify or categorize people, you’ll need to comply with much stricter requirements. Think: risk assessments, human oversight, and yes, more paperwork than you’d like.

- 🔴 Unacceptable risk

These are banned entirely. No discussion.

- AI that scores people based on their social behavior

- Real-time facial recognition in public spaces by police (with narrow exceptions), and scraping faces online to build recognition databases

- Emotion-reading tools in schools or the workplace

- AI that manipulates users or exploits their vulnerabilities (such as age or disability)

If you’re not doing any of the above: great. Keep it that way.

Okay, But What Kind of AI Does This Actually Apply To?

You might be thinking:

“Okay, I get the risk levels — but does that include my chatbot? What about that AI tool I use for pricing?”

Fair questions. The truth is, the EU AI Act doesn’t care what the tool is called; it cares about what the tool does. If it’s a machine-based system that infers from its inputs how to generate outputs such as predictions, content, recommendations, or decisions, it likely counts as AI under the law.

Here’s a quick overview of the most common AI tools used in e-commerce — and what the Act means for each one:

This Mazars analysis offers more examples of how different types of AI tools fall into each risk level.

Is My E-Shop Affected? 3 Questions to Find Out Fast

Let’s be honest — most e-shops aren’t deploying Terminators. But if you’re using AI anywhere in your customer journey, the new EU AI Act probably applies to you. Don’t worry, here’s how to tell what (if anything) you need to do.

1. You’re Just Using Google Analytics (or Similar Tools)

Examples: Google Analytics, Hotjar, standard performance tracking

🔍 Risk Level: Minimal

📋 Compliance Requirements: None — you’re outside the scope of regulated AI systems

No worries. If your site is simply tracking visitors, counting conversions, or running basic retargeting campaigns with pre-built tools like Google Analytics, you're likely in the “minimal risk” zone. As long as you're not crossing into profiling or automated decision-making, you’re safe.

What this means for you:

- No big changes.

- Just keep doing what you’re doing — ethically and transparently.

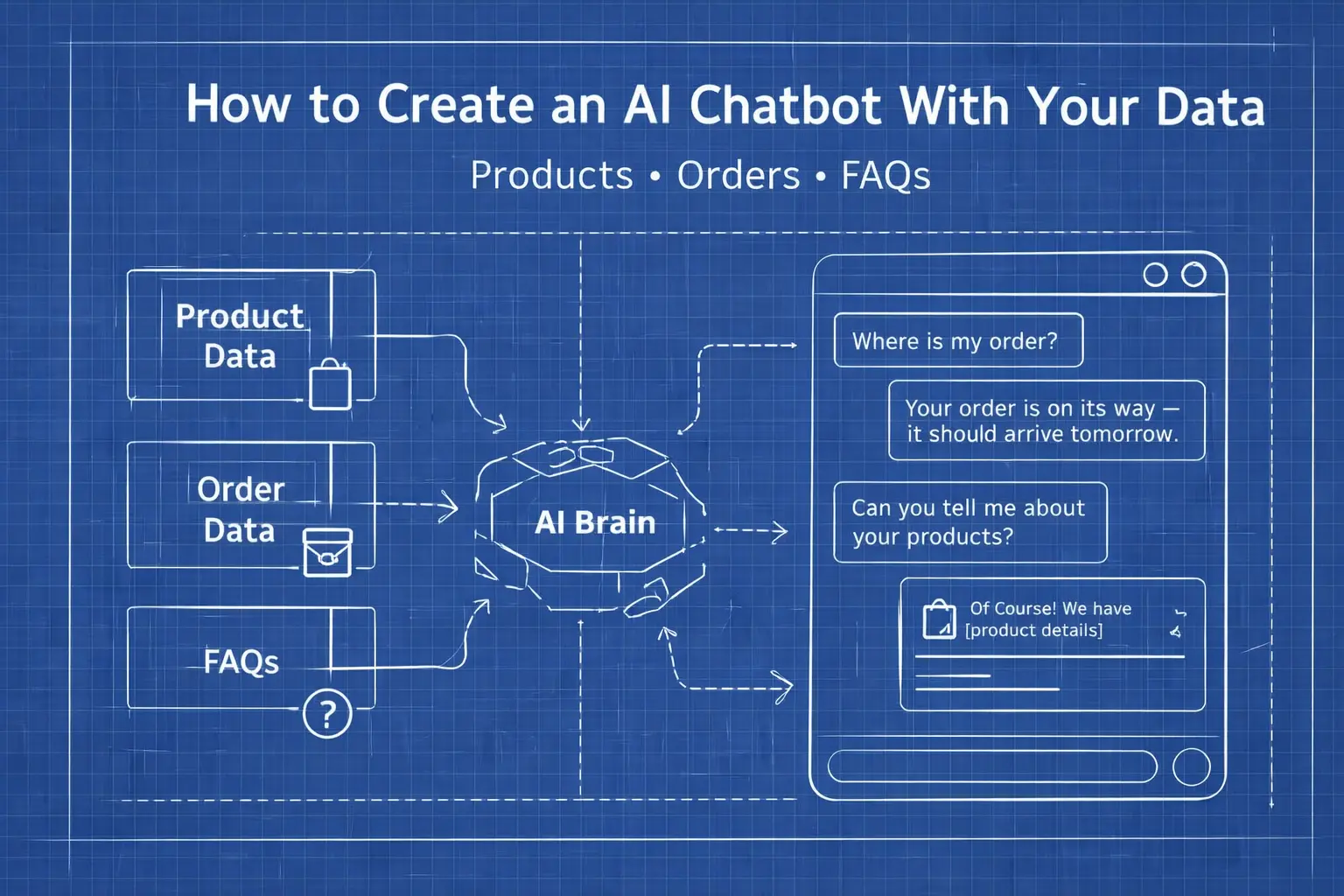

2. You Use AI Chatbots or Product Recommendations

Examples: AMIO’s AI Web Chat, personalized suggestions, automated replies

🔍 Risk Level: Limited

📋 Compliance Requirements:

- Transparency obligations – inform users they’re interacting with AI

- Ensure the AI doesn’t mislead or manipulate users

- Respect basic user rights (consent, data protection, etc.)

If your chatbot answers “Where’s my order?”, you’re likely fine. If it starts denying refunds based on tone of voice, we need to talk. These systems fall under limited-risk AI in the EU AI Act, and that means a few small but important obligations.

What this means for you:

- You need to tell users they’re interacting with AI

- Make sure the AI doesn’t mislead or manipulate

- Keep an eye on accuracy — if your chatbot starts recommending microwaves to someone buying sandals, you’ve got a problem (and possibly a refund)

3. You’re Building Custom AI or Using Sensitive Data

Examples: In-house AI models; tools that adjust prices per user; systems using biometric data (like facial recognition)

🔍 Risk Level: High

📋 Compliance Requirements:

- Risk assessment and documentation

- Built-in human oversight mechanisms

- Possibly a formal conformity assessment

- Clear user communication and fail-safes

Now we’re talking high-risk territory. Systems like these can be classified as high-risk AI when they affect consumer rights, pricing, or access to services such as credit or insurance.

They fall under stricter governance rules, and some deployers (for example, those using AI to assess creditworthiness or to price life and health insurance) must also carry out a fundamental rights impact assessment before putting them to use.

Don’t panic — but don’t ignore this either. The earlier you prep, the less you’ll pay later (figuratively and literally).

What this means for you:

- You’ll likely need to run a risk assessment

- Set up human oversight measures

- Possibly go through a conformity assessment (yes, that’s a real thing)
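If you want to run the three questions above across all your tools at once, here’s a minimal Python sketch of that triage. The `AIUseCase` flags, the `triage` function, and the tier labels are hypothetical names that simply mirror this article’s rule of thumb; the result is a first pass to discuss with your provider or a lawyer, not a legal classification under the Act.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    POSSIBLY_HIGH = "possibly high"


@dataclass
class AIUseCase:
    # Hypothetical yes/no flags that mirror the three questions above.
    name: str
    # Chatbots, automated replies.
    talks_to_customers: bool = False
    # Product suggestions, AI-written copy.
    recommends_or_generates: bool = False
    # Per-user pricing, credit or pay-later checks.
    personalizes_price_or_access: bool = False
    # Facial recognition or other sensitive data used in decisions.
    uses_biometric_or_sensitive_data: bool = False
    custom_in_house_model: bool = False


def triage(use_case: AIUseCase) -> RiskTier:
    """First-pass triage following this article's rule of thumb.

    Assumes no banned practice (social scoring, emotion reading at work)
    is involved; those are off the table entirely.
    """
    # Question 3: custom AI or sensitive data -> treat as potentially high-risk.
    if (use_case.personalizes_price_or_access
            or use_case.uses_biometric_or_sensitive_data
            or use_case.custom_in_house_model):
        return RiskTier.POSSIBLY_HIGH
    # Question 2: chatbots and recommendations -> transparency obligations.
    if use_case.talks_to_customers or use_case.recommends_or_generates:
        return RiskTier.LIMITED
    # Question 1: analytics and similar tools.
    return RiskTier.MINIMAL


inventory = [
    AIUseCase("Google Analytics"),
    AIUseCase("Website chatbot", talks_to_customers=True),
    AIUseCase("Per-user pricing engine", personalizes_price_or_access=True),
]
for tool in inventory:
    print(f"{tool.name}: {triage(tool).value}")
```

The checks run from the strictest tier down, so a chatbot that also uses biometric data lands in the “possibly high” bucket rather than “limited.”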

How Big Are the Fines If You Mess This Up?

Here’s where the EU gets serious.

Depending on how badly you mess up and what kind of AI you’re using, the penalties can be... well, very GDPR-esque.

💸 The fine print (literally):

- Up to €35 million or 7% of worldwide annual turnover, whichever is higher, for the worst violations (e.g. using banned AI like emotion recognition in the workplace or social scoring)

- Up to €15 million or 3% of turnover for most other violations, including breaking the high-risk requirements or the transparency obligations that cover chatbots

- Up to €7.5 million or 1% of turnover for giving incorrect, incomplete, or misleading information to authorities

So yes — even “just a chatbot” could get expensive if it’s misleading customers or pretending to be human.

For a legal deep dive into fines and regulatory requirements, see this expert analysis from WilmerHale.
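Each fine tier comes with two caps: a fixed amount and a percentage of worldwide annual turnover. For most companies the higher of the two applies; for SMEs and startups, the lower one does. Here’s a quick sketch of that arithmetic in Python. The function name and the example turnovers are made up for illustration, and the result is only the legal maximum; actual fines are set case by case.

```python
def max_fine_eur(annual_turnover_eur: float, fixed_cap_eur: float,
                 turnover_pct: float, is_sme: bool = False) -> float:
    """Upper limit of an AI Act fine for one penalty tier.

    annual_turnover_eur: total worldwide annual turnover.
    turnover_pct: the tier's percentage, e.g. 7 for the worst violations.
    Large companies face whichever amount is higher; SMEs and startups
    face whichever is lower.
    """
    pct_amount = annual_turnover_eur * turnover_pct / 100
    if is_sme:
        return float(min(fixed_cap_eur, pct_amount))
    return float(max(fixed_cap_eur, pct_amount))


# Worst tier (banned AI practices): €35 million or 7% of turnover.
print(max_fine_eur(2_000_000_000, 35_000_000, 7))           # 140000000.0
print(max_fine_eur(5_000_000, 35_000_000, 7, is_sme=True))   # 350000.0
```

For a retailer with €2 billion in turnover, 7% (€140 million) exceeds the €35 million figure, so the percentage sets the cap; for a small shop, the lower-of-two rule keeps the cap in proportion to its size.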

EU AI Act Compliance: 6 Steps for E-Commerce Teams

We get it — you’re running an online store, not studying EU law in your spare time. But staying compliant with the EU AI Act doesn’t have to be a nightmare (or require a team of in-house lawyers). Here’s how to stay on the safe side without tanking your to-do list.

1. Know Where You Use AI

Start by making a simple list:

- Do you use a chatbot on your homepage?

- Personalized product recommendations?

- AI-generated product copy or marketing emails?

- Automated replies?

- Dynamic pricing?

If you answered yes to any of these, congratulations: you’re using AI-based systems, which means the law might apply. Awareness is step one.

2. Understand the Risk Level

Most e-commerce AI falls under limited risk — which means you need to be transparent but you won’t need a mountain of paperwork.

But if your AI uses personal data to predict behavior, adjust pricing, or categorize users, it could move into high-risk territory, and that means more documentation, human oversight, and possibly a conformity assessment.

👉 Tip: If you’re not sure, ask your AI provider or check whether your use case appears in the EU AI Act’s list of high-risk use cases (Annex III). Keywords like credit scoring, emotion recognition, and biometric categorization are red flags.

3. Update Your Privacy Policy

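Yes, really. Transparency is at the heart of the EU AI Act, and the GDPR already requires you to tell users how their personal data is processed, including any automated decision-making. Your privacy policy is the natural place to explain how AI is used, what it does, and what kind of data it uses.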

Make sure your privacy policy explains:

- That you use AI (and where)

- What logic it uses (e.g. “This tool suggests products based on previous browsing”)

- What data it processes

- Whether users can opt out or reach a human

And remember — no shady surprises. The more open you are, the safer you are.

4. Tell Customers They’re Talking to a Bot

If a customer is chatting with AI, they have a right to know. This isn’t just good UX; it’s a legal requirement under the Act.

Add a line like:

“Hi! I’m an AI assistant here to help. You can ask to speak with a human anytime.”

If your AI offers recommendations or answers FAQs, you’re fine. If it starts making decisions about the customer (like approving refunds or filtering queries), make sure there’s human oversight available too.
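In chatbot logic, this boils down to two habits: disclose up front, and hand decisions about the customer to a person. Here’s a minimal Python sketch of both. The `handle_message` function and the keyword list are hypothetical, not part of any chatbot platform’s API (AMIO’s included), and a real bot would use proper intent detection rather than keyword matching.

```python
AI_DISCLOSURE = ("Hi! I'm an AI assistant here to help. "
                 "You can ask to speak with a human anytime.")

# Hypothetical triggers: requests where the bot would be deciding something
# about the customer, or an explicit request for a person.
ESCALATION_KEYWORDS = ("refund", "human", "agent", "complaint")


def handle_message(text: str, is_first_message: bool) -> list[str]:
    """Return the bot's replies to one customer message."""
    replies = []
    if is_first_message:
        # Transparency: say it's AI before anything else.
        replies.append(AI_DISCLOSURE)
    if any(word in text.lower() for word in ESCALATION_KEYWORDS):
        # Human oversight: route decisions about the customer to a person.
        replies.append("Let me connect you with a member of our team.")
    else:
        # Routine questions (order status, FAQs) stay with the bot.
        replies.append("Let me check that for you.")
    return replies


print(handle_message("Where's my order?", is_first_message=True))
print(handle_message("I want a refund", is_first_message=False))
```

Putting the disclosure in code, rather than relying on someone to remember it in the welcome text, means every new conversation gets it automatically.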

5. Pick the Right AI Partner (This One’s Huge)

If you’re not building AI yourself (and most e-shops aren’t), then your provider is your compliance partner.

Ask them:

- Is your system compliant with the EU AI Act?

- How do you handle transparency, human oversight, and data privacy?

- Can you help us prove compliance if needed?

💡 Pro tip: AMIO already builds in transparency prompts, human fallback options, and privacy-by-design logic — so your chatbot or automation tool won’t get you into trouble.

6. Document Everything (Seriously, Just Keep a Folder)

Keep basic records:

- What AI tools you use

- Which risk category each one falls under

- What steps you’ve taken to stay compliant

- Any updates to policies or user notices

For high-risk systems, the Act calls this a continuous risk management system, and it’s a formal requirement. For most e-shops, though, keeping records is as simple as updating a Google Doc.
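If you’d rather keep that folder in a structured form, here’s a minimal Python sketch with one record per AI tool, mirroring the four items in the list above. The `AIToolRecord` fields and the JSON output are our own illustrative choices, not a format the Act prescribes.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date

ALLOWED_CATEGORIES = {"minimal", "limited", "high"}


@dataclass
class AIToolRecord:
    # What AI tool you use.
    tool: str
    # Which risk category it falls under.
    risk_category: str
    # What steps you've taken to stay compliant.
    compliance_steps: list[str] = field(default_factory=list)
    # Any updates to policies or user notices.
    notice_updates: list[str] = field(default_factory=list)
    last_reviewed: str = field(default_factory=lambda: date.today().isoformat())

    def __post_init__(self) -> None:
        # Catch typos early so the register stays consistent.
        if self.risk_category not in ALLOWED_CATEGORIES:
            raise ValueError(f"Unknown risk category: {self.risk_category!r}")


def register_json(records: list[AIToolRecord]) -> str:
    """Serialize the register so it can live in a shared doc or folder."""
    return json.dumps([asdict(r) for r in records], indent=2, ensure_ascii=False)


records = [
    AIToolRecord(
        tool="Website chatbot",
        risk_category="limited",
        compliance_steps=["AI disclosure in greeting", "Human handoff on request"],
        notice_updates=["Privacy policy: added section on AI use"],
    ),
]
print(register_json(records))
```

Updating `last_reviewed` whenever you revisit a tool gives you a simple trail showing the list is kept current, not written once and forgotten.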

Staying compliant is about knowing what you use, being clear with your users, and working with providers who take this seriously. You don’t need a legal team. You just need a plan. And maybe a chatbot that knows when to escalate.

AI Act Compliance: You’re Probably 80% There (Here’s the Last 20%)

Let’s recap: yes, the EU AI Act is a big deal. But no, it doesn’t mean you have to unplug your chatbot or stop using your automation tools.

If you’ve made it this far, you already know where you use AI and which risk category it falls under. You’re already 80% there.

So what’s the final stretch?

Use an AI provider that’s already one step ahead

The fastest way to reach full compliance isn’t to build your own legal playbook — it’s to partner with a platform that’s done the work for you. That’s where AMIO comes in.

- We’ve baked transparency, human oversight, and user controls directly into the platform.

- We provide a ready-made compliance statement you can share with auditors, regulators, or even just your boss.

- And we stay ahead of evolving rules, so you don’t have to constantly re-check the fine print.

Using AMIO? You’re AI Act Ready.

Great news: You can reference our official statement and show that you’re aligned with the EU AI Act without lifting a finger. Our compliance statement is ready to share with no extra setup needed.

🔗 View AMIO’s EU AI Act Compliance Statement →

Print it, bookmark it, forward it to legal, or just know that you’ve got backup if someone ever asks, “Are we even compliant with this?”

Not a Fan of Regulations? You’ll Like This Ending.

Let’s be honest, AI compliance isn’t why you got into e-commerce. You’re here to build, grow, and serve customers, not decode EU legislation.

But here’s the good news: if you understand the basics, ask the right questions, and choose the right platform, compliance becomes just another thing on your to-do list, not a massive burden. It’s like checking the expiry date before making a smoothie: most of the time it’s fine, but skip it once and you’ll remember forever.

So here’s your takeaway:

- Know where your AI lives

- Label your bots

- Don’t build anything that reads emotions (seriously, just don’t)

And the next time someone brings up the EU AI Act at work or over coffee, you’ll just nod and say: “Yeah. I read the one article that actually made sense. We’re good.”

📌 Bonus: Who’s Behind This Article?

This guide was brought to you by AMIO — a messaging automation platform that helps e-commerce brands connect with customers smarter, faster, and with less stress.

We build AI tools with privacy, compliance, and actual business goals in mind. So if you’re looking for a chatbot that respects EU rules and knows how to handle “Where’s my order?”, we should talk.

🔗 See how AMIO helps e-shops automate customer support

FAQ: EU AI Act for E-commerce Brands

1. Does the EU AI Act apply to small e-commerce stores?

Yes — the EU AI Act applies even to small e-commerce businesses if they use AI tools like chatbots, recommendations, or dynamic pricing.

2. Are AI chatbots regulated under the EU AI Act?

Yes. Chatbots are considered AI systems. If they interact with customers, automate responses, or use data to influence decisions, they must meet transparency rules.

3. What are the fines for non-compliance with the EU AI Act?

The fines can go up to €35 million or 7% of worldwide annual turnover, whichever is higher, depending on the severity of the violation.

4. What do I need to do if I use an AI chatbot?

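Start by auditing your AI tools. Then make sure your chatbot tells users they’re talking to AI, offers an easy way to reach a human, doesn’t mislead or manipulate, and runs on a provider that can show how it supports AI Act compliance.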

5. What is considered a high-risk AI system in e-commerce?

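High-risk AI is defined by specific use cases listed in the Act (Annex III), such as credit scoring, biometric categorization, and emotion recognition. In e-commerce, tools that profile users, adjust prices per person, or use biometric or sensitive data in decision-making deserve a closer look. Most e-commerce chatbots are not high-risk, but it depends on how they’re used.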

6. Do I need to update my privacy policy because of the EU AI Act?

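Most likely, yes. You should clearly explain how AI is used on your site and how it affects users’ data; the GDPR already requires this kind of transparency for personal data, and it fits the AI Act’s focus on transparency.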

7. Do I need to register my AI system somewhere?

Only high-risk systems require registration or formal conformity steps. Most basic e-commerce tools don’t fall into that category.

8. When does the EU AI Act start applying?

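The Act entered into force on 1 August 2024 and applies in phases. The bans on prohibited AI practices have applied since 2 February 2025, the rules for general-purpose AI models since 2 August 2025, and most other obligations, including chatbot transparency and most high-risk requirements, apply from 2 August 2026.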

9. How can I make sure my AI chatbot is compliant?

Use a trusted provider that meets EU AI Act guidelines. AMIO, for example, ensures full compliance and gives you full control over messaging.

10. Where can I read the full text of the EU AI Act?

You can download the official PDF version here.

11. Do non-EU e-shops need to care?

Yes. If you sell to EU customers, the rules apply to you too. Even if you’re based in the Bahamas. No loopholes here!

Book a 30-minute session where we’ll find out how an AI bot can help you decrease call center costs, increase online conversion, and improve customer experience.

Book a demo